Every once in a while, a website important to you goes down. It’s usually something mundane: scheduled maintenance, a domain expiring, issues with DNS, or some script kiddie taking over your WordPress install because you forgot to update that one plugin. It’s usually just a small hiccup that you can recover from over coffee.

This, unfortunately, wasn’t just a hiccup. This was mayday. A word that is not to be used lightly. What happens when you forget to pay your hosting bill and the renewal emails get buried in your inbox? Well, we found out the hard way.

We lost all of ln(exun), permanently, after Bluehost terminated our hosting plan. All those posts, dating back 20 years, were permanently wiped.

Since I’m an alumnus, I no longer actively managed the site. I was absolutely shocked that all of it just got wiped. Mistakes happen, but now it was time to salvage whatever we could. Still, the grief was real: all the competition wins, underscore articles, the random stuff posted from 2005 – it was all gone.

Was this the end of the Natural Log of Exun?

• • • • •

Enter “backups”

I vaguely remembered that sometime back in the day I took an XML backup of lnexun’s WordPress. For the record, I have a practice of never deleting anything from my laptop. Instead, I believe in having a larger trash can and that’s how I now have a 2TB Mac which is also full 🫠

For context, WordPress backs up only text content in an XML file with the format “<site>.wordpress.<date>.xml” when you export it from their dashboard. I searched for that exact filename and I was blessed to be able to find a shiny 5.9 MB file called “lnexun.wordpress.2017-05-06.xml” that I backed up on May 6, 2017.
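If you ever need to hunt for an old export like this, here is a minimal Python sketch of that search. It assumes the date part always looks like YYYY-MM-DD (as it does in my file); the names `EXPORT_NAME` and `find_exports` are made up for illustration.

```python
import re
from pathlib import Path

# Pattern for the export name described above: <site>.wordpress.<date>.xml
# Assumption: the date is always formatted as YYYY-MM-DD.
EXPORT_NAME = re.compile(
    r"^(?P<site>.+)\.wordpress\.(?P<date>\d{4}-\d{2}-\d{2})\.xml$"
)


def find_exports(root):
    """Yield (path, site, date) for files under root that look like WordPress exports."""
    for path in Path(root).rglob("*.wordpress.*.xml"):
        match = EXPORT_NAME.match(path.name)
        if match:
            yield path, match["site"], match["date"]
```

Calling `list(find_exports(Path.home()))` walks the whole home directory, which can take a while on a disk as full as mine.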

This was a very solid head start. We have all posts until 2017, but the restoration isn’t complete. At this point we’re looking to restore all images uploaded to ln(exun) along with all posts after 2017. The goal is to get to 100% restoration – as much as we can push it.

Going way back via a different medium

You guessed it, we’re going wayyyyback. But before that, I recalled that we ported our site to Medium in 2018 temporarily as an experiment. This meant we had all posts until April 2018 along with all images until that date.

Thanks to GDPR, I was able to download my entire Medium account dump. This also included the ln(exun) Medium publication, which had all the posts in HTML.

Every post was its own HTML file in the posts folder

Here is what an image tag looked like in all these HTML files.

<img class="graf-image" data-image-id="0*fycT_sxDuHbisPuV.jpg" data-width="1024" data-height="759" data-external-src="http://www.lnexun.com/wp-content/uploads/2015/10/Dynamix-Overall-1024x759.jpg" alt="Dynamix Overall" src="https://cdn-images-1.medium.com/max/800/0*fycT_sxDuHbisPuV.jpg">

There are two key attributes here: the src attribute, which points to the copy hosted on the Medium CDN, and, most importantly, the data-external-src attribute, which tells me exactly where that image lived on our site at the time of export.

For context, if we can just put the correct images in the wp-content/uploads/<year>/<month>/<image_name> path we can retroactively have every single image working in every single post.
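To make that mapping concrete, here is a rough Python sketch (the actual script was a shell script, so treat this as an illustration rather than what I ran). It pulls the src and data-external-src pair out of each img tag and turns the original URL into a relative wp-content/uploads/... path. `ImageTagCollector` and `local_upload_path` are hypothetical names.

```python
from html.parser import HTMLParser
from pathlib import PurePosixPath
from urllib.parse import urlparse


class ImageTagCollector(HTMLParser):
    """Collect (cdn_url, original_url) pairs from <img> tags in a Medium export."""

    def __init__(self):
        super().__init__()
        self.pairs = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        cdn_url = attributes.get("src")
        original_url = attributes.get("data-external-src")
        # Only images that were originally hosted elsewhere carry both attributes.
        if cdn_url and original_url:
            self.pairs.append((cdn_url, original_url))


def local_upload_path(original_url):
    """Return the relative wp-content/... path for an original image URL, or None."""
    parts = PurePosixPath(urlparse(original_url).path).parts
    if "wp-content" not in parts:
        return None
    # Drop everything before wp-content so the path is relative to the site root.
    return str(PurePosixPath(*parts[parts.index("wp-content"):]))
```

Feeding one exported post to `ImageTagCollector().feed(html_text)` fills `pairs`, and `local_upload_path` then says where each downloaded file belongs.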

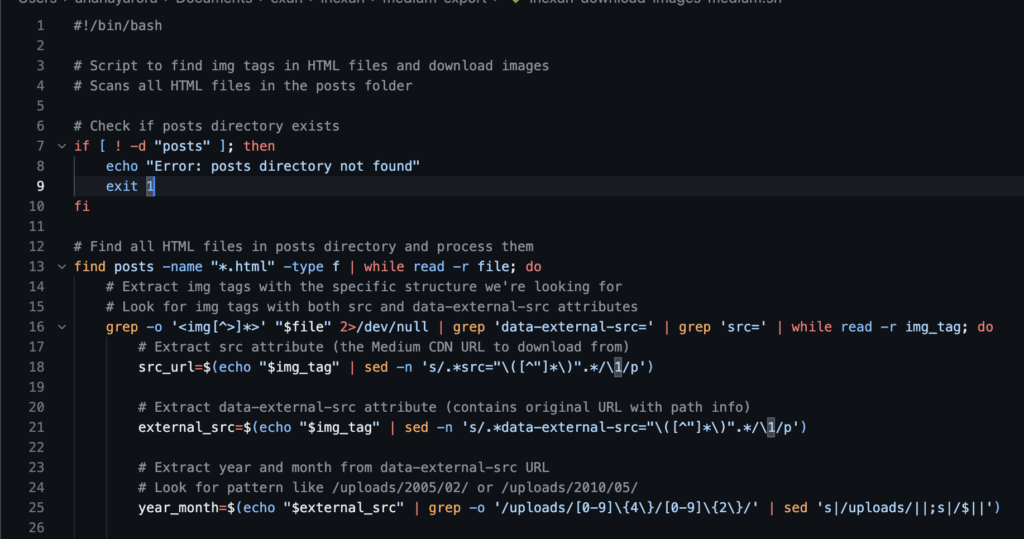

This was absolutely perfect. To sum it up, we now have the images from Medium’s CDN and also the paths where we have to place them. It was now time to bust out Cursor and write that shell script to automatically scan for these tags, get the correct attributes, download the images, and place them in the correct folders.
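The download-and-place step could look something like the Python sketch below. Again, the real thing was a shell script, so this is only an approximation of the idea. It assumes you already have a list of (source URL, relative path) jobs; `download_images` and its `opener` parameter are names I made up, and `opener` exists only so the function can be exercised without hitting the network.

```python
import shutil
import urllib.request
from pathlib import Path


def download_images(jobs, site_root, opener=urllib.request.urlopen):
    """Download each (source_url, relative_path) job into site_root.

    Returns the list of files written. Files that already exist are skipped,
    so the function can be re-run after a failure.
    """
    written = []
    for source_url, relative_path in jobs:
        destination = Path(site_root) / relative_path
        if destination.exists():
            continue
        # Recreate the wp-content/uploads/<year>/<month>/ folders as needed.
        destination.parent.mkdir(parents=True, exist_ok=True)
        with opener(source_url) as response, open(destination, "wb") as handle:
            shutil.copyfileobj(response, handle)
        written.append(destination)
    return written
```

One caveat of keeping it this simple: a download interrupted halfway leaves a partial file behind, and the next run will skip it, so a failed run needs a quick cleanup.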

Amazing. We now have all the images until 2018 🎉

Actually going back to 2018

Now that we have all images until 2018 and posts until 2017, we can get the rest. I didn’t want to export the remaining ~8 months of posts from Medium between 2017 and 2018, since we’re actually going wayback here (the moment we’ve been waiting for) and we can get the rest in one pass.

The problem with the Wayback Machine is that every request is painfully slow, and there is no guarantee that we’ll find all the posts, but we’ll try our best. The Internet Archive is always the last option.

To start off, we first need to know when the Wayback Machine indexed ln(exun). The Wayback Machine has the CDX API. CDX is a special index format and API that the Wayback system uses to list and look up archived captures of web pages.
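For reference, a CDX query is just a URL with a few parameters. Here is a small Python sketch that builds one. The choice of parameters (matching the whole domain, keeping only 200 responses, optionally starting from a given year) is my assumption for illustration, not necessarily the exact query I ran, and `cdx_query_url` is a made-up helper name.

```python
from urllib.parse import urlencode

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"


def cdx_query_url(domain, from_year=None):
    """Build a CDX API URL listing captures for a domain as JSON."""
    params = {
        "url": domain,
        "matchType": "domain",       # include every page under the domain
        "output": "json",            # JSON rows instead of plain text
        "filter": "statuscode:200",  # assumption: only successful captures matter
    }
    if from_year is not None:
        params["from"] = str(from_year)
    return f"{CDX_ENDPOINT}?{urlencode(params)}"
```

For example, `cdx_query_url("lnexun.com", from_year=2017)` gives a URL you can open in a browser or fetch from a script.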

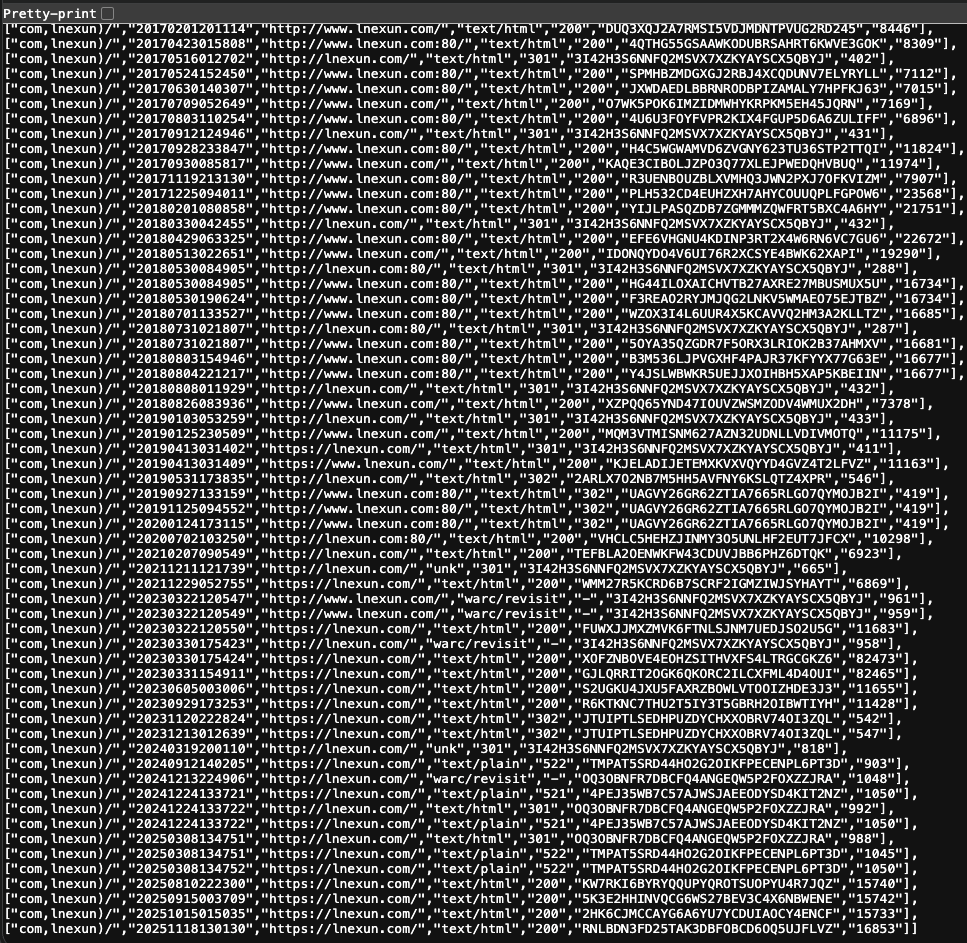

Requesting ln(exun)’s CDX entries we see a bunch of times Wayback indexed ln(exun), in a not-so-neat JSON response.
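The JSON is "not-so-neat" because it comes back as a list of lists, where the first row holds the column names and every row after it is one capture. A tiny Python sketch to turn that into something friendlier (`parse_cdx_json` is a hypothetical helper name):

```python
import json


def parse_cdx_json(text):
    """Turn a CDX JSON response into a list of dicts, one per capture.

    The first row of the response is the header (column names); an empty
    response yields an empty list.
    """
    rows = json.loads(text) if text.strip() else []
    if not rows:
        return []
    header, *captures = rows
    return [dict(zip(header, row)) for row in captures]
```

Each resulting dict then has keys such as `timestamp` and `original`, which is all that is needed to request a snapshot.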

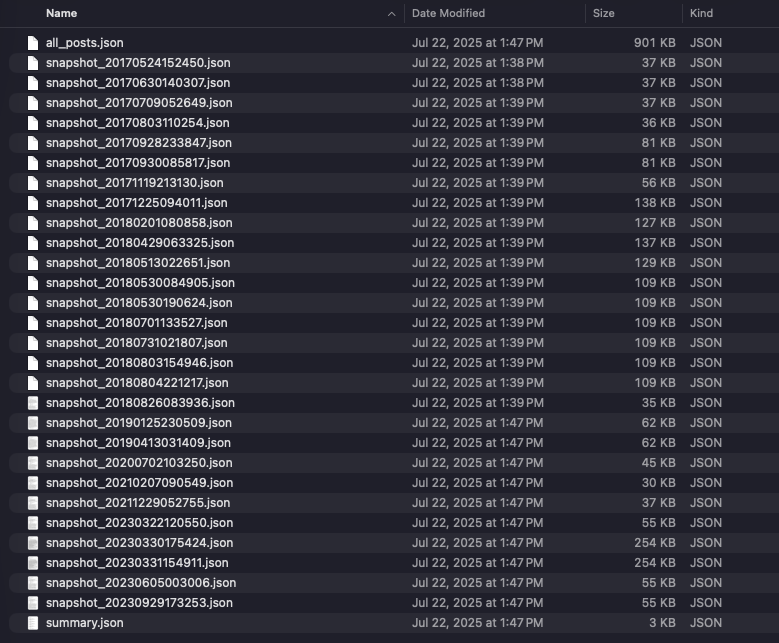

Great, now that we have all the times Wayback scraped ln(exun), we can begin requesting individual snapshots after 2017. Time to bust out Cursor and vibecode some scripts to scrape all these timestamps. By the end, I had all the individual snapshots, a JSON file with all posts, and a summary.json (with a summary of everything scraped).
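The core of such a script is small. Here is a Python sketch, assuming each capture is a dict with `timestamp` and `original` keys taken from the CDX listing. Keeping only the newest capture per URL is my simplification for illustration (the vibecoded scripts may well have done it differently), and both function names are made up. The `id_` suffix asks Wayback for the page as it was archived, without its toolbar and rewritten links.

```python
def snapshot_url(timestamp, original_url, raw=True):
    """Build the Wayback URL for one capture; raw=True adds the id_ modifier."""
    modifier = "id_" if raw else ""
    return f"https://web.archive.org/web/{timestamp}{modifier}/{original_url}"


def latest_captures_since(captures, cutoff):
    """Keep the newest capture per original URL, ignoring anything before cutoff.

    Timestamps are YYYYMMDDhhmmss strings, so plain string comparison
    sorts them chronologically; cutoff can be a prefix such as "2017".
    """
    latest = {}
    for capture in captures:
        timestamp = capture["timestamp"]
        if timestamp < cutoff:
            continue
        url = capture["original"]
        if url not in latest or timestamp > latest[url]["timestamp"]:
            latest[url] = capture
    return list(latest.values())
```

Since every Wayback request is slow, trimming the list down like this before fetching anything saves a lot of waiting.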

All of these were consolidated into a nice lnexun_posts_import.xml file that could be imported into WordPress, covering all the remaining posts until the end of 2022. After that, all other posts were on Blogger, which was a very simple export and import.
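For the curious, the import file is WordPress's WXR format, which is RSS with a few extra namespaces. Below is a stripped-down Python sketch of generating one. It is not the script that produced lnexun_posts_import.xml: the post dictionary keys (`title`, `author`, `html`, `date`, `slug`) are hypothetical, only a handful of fields are written, and I have not checked this minimal output against the WordPress importer, which may want more fields.

```python
import xml.etree.ElementTree as ET

# Namespaces used by WordPress export (WXR 1.2) files.
NAMESPACES = {
    "content": "http://purl.org/rss/1.0/modules/content/",
    "dc": "http://purl.org/dc/elements/1.1/",
    "wp": "http://wordpress.org/export/1.2/",
}


def build_wxr(posts, site_title, site_url):
    """Return a minimal WXR document (as UTF-8 bytes) for a list of post dicts."""
    for prefix, uri in NAMESPACES.items():
        ET.register_namespace(prefix, uri)

    def qualified(prefix, tag):
        return f"{{{NAMESPACES[prefix]}}}{tag}"

    rss = ET.Element("rss", {"version": "2.0"})
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = site_title
    ET.SubElement(channel, "link").text = site_url
    ET.SubElement(channel, qualified("wp", "wxr_version")).text = "1.2"

    for post in posts:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = post["title"]
        ET.SubElement(item, qualified("dc", "creator")).text = post["author"]
        ET.SubElement(item, qualified("content", "encoded")).text = post["html"]
        # Assumption: date is already formatted as "YYYY-MM-DD HH:MM:SS".
        ET.SubElement(item, qualified("wp", "post_date")).text = post["date"]
        ET.SubElement(item, qualified("wp", "post_name")).text = post["slug"]
        ET.SubElement(item, qualified("wp", "status")).text = "publish"
        ET.SubElement(item, qualified("wp", "post_type")).text = "post"

    return ET.tostring(rss, encoding="utf-8", xml_declaration=True)
```

Writing the returned bytes to a file gives something you can try in the importer on a throwaway WordPress install first.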

Amazing. We now have all the posts. 🙌

Now for the last piece: we just need the images from 2017 onwards. Wayback had them, so it was easy to simply download the remaining images from the posts. Time to break out Cursor one last time and write a script to download all the remaining images and have them placed in the right folder.
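One detail worth sketching: image links inside archived pages are rewritten to point back at Wayback, so the original URL (and with it the wp-content/uploads/... path) has to be peeled out of them. A small Python sketch under that assumption; the helper names are made up, and `im_` is the Wayback modifier that returns the image file itself.

```python
import re

# Matches Wayback-rewritten links such as
#   /web/<timestamp>im_/http://example.com/picture.jpg
# with or without the scheme and web.archive.org host in front.
WAYBACK_PREFIX = re.compile(
    r"^(?:https?:)?(?://web\.archive\.org)?/web/"
    r"(?P<timestamp>\d{1,14})(?:[a-z]{2}_)?/(?P<original>.+)$"
)


def split_wayback_url(url):
    """Return (timestamp, original_url) for a Wayback link, or None otherwise."""
    match = WAYBACK_PREFIX.match(url)
    if not match:
        return None
    return match["timestamp"], match["original"]


def image_snapshot_url(timestamp, original_url):
    """Build the Wayback URL that serves the archived image file itself."""
    return f"https://web.archive.org/web/{timestamp}im_/{original_url}"
```

With the original URL recovered, the image goes into the same wp-content/uploads/<year>/<month>/ folder it lived in before.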

🎉 Success! We have officially recovered all of ln(exun) at this point 🎉

This wasn’t a straight path forward; it was quite rocky. A lot of planning went into this, along with careful coordination and review to make sure everything was perfectly pieced together.

There was also one failed attempt at using RSS feeds to get back everything, but RSS feeds didn’t have everything in them. All the authors were missing from these posts too, or at least not mapped correctly to the posts when imported. More scripts were written for that as well, and it also required a little bit of manual work.

I’m also genuinely impressed at how much time Cursor saved me here. Writing those scripts could’ve taken me hours; it really did all the heavy lifting for me, making the scraping a breeze.

Since there is no sensitive information here, I’ve gone ahead and open sourced all scripts and outputs if someone really wants to mess around with them.

Conclusion

This felt like Sudocrypt: piecing things together, jumping through hurdles and getting to the answer, except this was more like hunting down and piecing back together our natural log from all the fragments we could find. While I enjoyed doing this a lot and it taught me quite a bunch of stuff, this is something that should not happen again.

We recognize that current Exun members and faculty are busy with school and things like these can easily slip through. Keeping this in mind, Exun Alumni Network (EAN) has taken over the responsibility of maintaining lnexun.com. With our expertise and resources, we will ensure that lnexun stays online, keeping our legacy alive.

A huge shoutout to Bharat Kashyap (President, Exun 2015) for setting up the hosting for ln(exun) 🙏

Signing off,

Ananay Arora

President, Exun Class of 2017